The Military and Dual Use Neuroscience, Part II

In a previous post, I discussed several promising neuroscience technologies currently under investigation by the military. Simply knowing that such technology exists, however, does not in itself dictate a way forward for neuroscientists and others who are concerned about the possible consequences of military neuroscience research. In part, the complexity of the situation derives from the diversity of possible viewpoints involved: an individual’s beliefs about military neuroscience technology likely stem as much from beliefs about the military in general, or technological advancement in general, as from beliefs about the specific applications of the neuroscientific technologies in question.

With that in mind, I think there are at least three distinct angles from which an individual might find himself or herself concerned about military neuroscience research. First, there are those who are opposed to neuroscientific advancement in general due to its potential implications for identity, moral responsibility, and human nature. Second, there are those who may be amenable to scientific advancement in general, but who distrust the military and are therefore suspicious of any technology that may improve its combat capability. Finally, there are those who have no a priori problem with either the military or technological advancement, but who have concerns about the ways that particular technologies may be deployed within a military context.

Technological Skeptics

Perhaps the best-known skeptic of biotechnological advancement is political scientist Francis Fukuyama, known largely for his 1989 argument that the end of the Cold War would usher in the “end of history.” While Fukuyama does not oppose all biotechnological advancement, his 2002 book Our Posthuman Future: Consequences of the Biotechnology Revolution raises concerns about a number of particularly revolutionary biotechnologies. For Fukuyama, belief in human equality depends upon a consensus that there are certain essential qualities that unite all human beings. Modern liberal societies, he argues, are notable in that they attribute essential humanness to a range of persons – women, for instance, and racial minorities – to whom such respect has historically been denied. Fukuyama’s fear is that novel biotechnologies may undermine our collective belief in essential humanness, and consequently the philosophical basis for political equality. One area of particular concern for Fukuyama is neuropharmacology, where he predicts the development of “sophisticated psychotropic drugs with more powerful and targeted effects” with the capacity “to enhance intelligence, memory, emotional sensitivity, and sexuality.” Many of Fukuyama’s arguments involve potential inequalities generated through genetic engineering, but it is not difficult to extend his logic to many neurotechnologies – for instance, brain-controlled prosthetic limbs or transcranial magnetic stimulation – currently under investigation by the military.

Fukuyama and others who hold similar beliefs tend to oppose a wide range of military and civilian biotechnologies rather than military neuroscience per se. Such viewpoints, however, hold particular implications for military research, if only because DARPA tends to be very, very good at what it does. Historically, the military has played key roles in the development of microchips, cell phones, GPS, and the Internet. This is no coincidence: compared to researchers in other programs, DARPA researchers enjoy a number of unique advantages. In Mind Wars, bioethicist Jonathan Moreno points out that “in the DARPA framework decades of development are acceptable” and that, according to the DARPA strategic plan, “its only charter is radical innovation.” That DARPA’s innovation is of this radical kind should constitute a point of concern for those who believe that neuroscientific research is already progressing too quickly. While private pharmaceutical firms might develop drugs targeted to specific symptoms or diseases, DARPA has a particular incentive to invent drugs that produce what might reasonably be called “superhumans”: drugs that make humans more intelligent, that increase human endurance and stamina, or that substantially enhance human physical performance.

Constraining The Military

Other concerns stem from the nature of the military itself: because it is an institution that uses force to achieve political ends, it is worth asking whether military neuroscience should be avoided insofar as it renders the military more capable of achieving these ends.

One area of concern relates to privacy, where several new technologies suggest a fundamental shift in the nature of state surveillance. In my first post on military neuroscience, I discussed the Veritas TruthWave EEG helmet and fMRI lie detection as two especially notable surveillance technologies. While both technologies have significant drawbacks – accuracy in the case of the EEG helmet, and usability in the case of fMRI – such advances may nevertheless have worrisome implications. An article on TruthWave raises one relevant concern regarding false positives: “When a person’s life or freedom is at stake, what is an acceptable margin of error?” In at least some cases – drone strikes on suspected terrorists, for instance, in which “all military-age males in a strike zone” are assumed to be enemy combatants – the military has demonstrated a tendency to set the “acceptable margin of error” higher than it might be in, say, a court of law. The present generation of mind-reading technologies occupies a grey area in the sense that they’re likely accurate enough to be more reliable than intuition, but not so accurate as to reliably avoid false positives. In conjunction with high-stress combat situations or high-priority military objectives, this grey area may become particularly difficult to negotiate.
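To make the false-positive worry concrete, here is a minimal sketch of the underlying arithmetic using Bayes' rule. Every number in it is hypothetical and chosen only for illustration: none comes from TruthWave, fMRI studies, or any military source, and the function name is my own. The point is simply that a detector that sounds quite accurate can still be wrong about most of the people it flags when the people it is looking for are rare.

```python
def positive_predictive_value(sensitivity, specificity, base_rate):
    """Probability that a flagged person is actually deceptive (Bayes' rule).

    sensitivity: share of deceptive people the detector correctly flags
    specificity: share of truthful people the detector correctly clears
    base_rate:   share of screened people who are actually deceptive
    """
    true_positives = sensitivity * base_rate
    false_positives = (1.0 - specificity) * (1.0 - base_rate)
    return true_positives / (true_positives + false_positives)


# Hypothetical inputs (assumptions, not measurements): a detector that is
# right 90% of the time in both directions, screening a population in
# which 1 person in 100 is actually deceptive.
ppv = positive_predictive_value(sensitivity=0.90, specificity=0.90, base_rate=0.01)
print(f"Share of flagged people who are actually deceptive: {ppv:.1%}")
```

Under these assumed figures, only about 8% of the people the detector flags would actually be deceptive; the rest are false positives. Different assumptions give different answers, which is exactly why the “acceptable margin of error” question matters.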

Further, it is possible that some innovations in military neuroscience may lower the cost of warfare, increasing the likelihood of intervention and violent conflict. In a 2005 article, Arizona State engineering and ethics professor Brad Allenby suggests that “military prowess, embodied in incredibly potent technological capabilities, acts like a drug, leading to dysfunctionally oversimplistic policy choices,” citing the Iraq war as a prominent example. Innovations in remote-operated robotic weapons – commonly known as UAVs, or “drones” – have already increased the U.S. military’s propensity to engage in combat operations. As one Washington Post editorialist puts it: “The detachment with which the United States can inflict death upon our enemies is surely one reason why U.S. military involvement around the world has expanded over the past two decades.”[1] Military neurotechnology seems poised to reinforce and accelerate this trend. Neurologically-controlled robots, for instance, may drastically improve the effectiveness and flexibility of current UAVs. More broadly, it seems likely that a war we are more likely to win is also a war that we are more likely to fight: in that sense, cognitively-enhanced soldiers, innovative prosthetics, and improved surveillance may all foreshadow a similar future involving a more powerful, more active U.S. military.

Legality And Other Issues

Many problematic aspects of new military neuroscience technologies derive simply from the fact that they are new. Consequently, many current international legal frameworks designed to constrain warfare may not apply to new neuroscience technologies. Concerns of this sort are discussed at length in a U.K. Royal Society report on neuroscience and conflict. For instance, newly-developed pharmaceuticals may have uses in the interrogation of suspected terrorists or prisoners of war. Coercion of POWs is outlawed by the Geneva Convention, as are medical procedures contrary to “the rules of medical ethics.” The extent to which the Geneva Convention applies to terrorists, however, has been a matter of dispute. Given recent reports of coercive pharmaceutical use at Guantanamo Bay, concerns of this nature are particularly salient.

The deployment of novel chemical incapacitants in combat raises complex legal questions as well. The Chemical Weapons Convention (CWC), an international agreement ratified by the U.S. in 1997, bans the use of chemical weapons in conflict. At times, however, the CWC can be ambiguous: according to the U.K. Royal Society report on neuroscience and conflict, parts of the CWC may be interpreted to allow “the use of toxic chemicals to enforce domestic law extra-jurisdictionally or to enforce international law.” Insofar as international law and extra-jurisdictional enforcement of domestic law are themselves open to interpretation, the CWC may leave the door open to some offensive uses of chemical weapons.

Finally, the use of cognitive-enhancing drugs to aid friendly soldiers is complicated by a provision of the Uniform Code of Military Justice requiring servicemembers “to accept medical interventions that make them fit for duty.” On one hand, novel enhancement drugs might provide safer alternatives to currently-employed stimulants such as amphetamines, thereby lowering the health risks to military personnel. On the other hand, safer drugs could lower the costs associated with coercive drug use, resulting in a lower threshold for coercion of military servicemembers.

It’s Not All Bad

Depending on your perspective, of course, what I have called “concerns” may in fact be substantial benefits. I'm certain that leading transhumanist Nick Bostrom sincerely looks forward to brain-computer interfaces, and that pro-military foreign policy analyst Robert Kagan wholeheartedly supports technologies that sustain U.S. military dominance. More generally, neurologically-enabled prosthetics have obvious benefits for disabled persons, and civilian spin-offs of many military neurotechnologies hold considerable medical and commercial promise.

Nevertheless, there is a large enough breadth of military neuroscience research, and a similarly diverse set of ideological positions from which to criticize it, that any given individual is likely to find something in DARPA’s research that they consider worthy of concern. Coming to such a conclusion, however, is only a first step, and the second step – “what to do about it?” – does not have a simple answer. To a large extent, those who publish on the implications of dual-use neuroscience technology have made similar suggestions. The Bulletin of the Atomic Scientists, for instance, recommends greater interdisciplinary discussion on dual-use technology, and a 2003 Nature editorial suggests that “researchers should perhaps spend more time pondering the intentions of the people who fund their work.” While these publications are certainly correct in identifying a need for additional discussion on dual-use technology, I’ve personally found myself frustrated at the lack of specific, practical suggestions in much of the literature.

It is Jonathan Moreno who, to my knowledge, has offered the most concrete suggestions for coping with the implications of military neuroscience. In Mind Wars, Moreno warns neuroscientists against rejecting all military funding, arguing that such a position would only push DARPA research into greater secrecy. If military neuroscience research is inevitable, he reasons, it is better for it to be done transparently (and therefore in some sense democratically) than for it to be done underground, where it cannot be criticized simply because it is not known about. Instead, he recommends that neuroscientists attempt to influence public discussion on military neuroscience in a similar fashion to scientists who opposed nuclear weapons use during the Cold War. From a regulatory perspective, Moreno further recommends the creation of a “neurosecurity equivalent to the National Security Advisory Board for Biosecurity,” a committee that would include scientific input from a range of disciplines, including neuroethics. Moreno is also optimistic that at least some in the defense industry might take ethical issues seriously, citing one defense contractor who has “had ethics advice from the very beginning [of a brain-machine interface project].”

I don't agree with all of Moreno's suggestions – I think, for instance, that there's something to be said for the symbolic value of refusing to work on a project that you disagree with – but I admire his attempt to provide concrete solutions to an issue that defies simple analysis. In a 2012 article in PLoS Biology, Moreno and Michael Tennison suggest that “discussions in themselves will not ensure that the translation of basic science into deployed product will proceed ethically... These considerations must be embedded and explored at various levels in society: upstream in the minds and goals of scientists, downstream in the creation of advisory bodies, and broadly in the public at large.” Whether and how the ethics of military neuroscience are “embedded and explored” have yet to be determined, but the answers to these questions will have significant implications for the control and ultimate consequences of military neuroscience research.

[1] Though it’s worth noting that the author, John Pike of Globalsecurity.org, considers this to be basically a good thing, writing that “the large-scale organized killing that has characterized six millenniums of human history could be ended by the fiat of the American Peace.”

Want to cite this post?

Gordon, R. (2012). The Military and Dual Use Neuroscience, Part II. The Neuroethics Blog. Retrieved from http://www.theneuroethicsblog.com/2012/10/the-military-and-dual-use-neuroscience.html

currently under investigation by the military. Simply knowing that such

technology exists, however, does not in itself dictate a way forward for

neuroscientists and others who are concerned about the possible consequences of

military neuroscience research. In part, the complexity of the situation

derives from the diversity of possible viewpoints involved: an individual’s

beliefs about military neuroscience technology likely stem as much from beliefs

about the military in general, or technological advancement in general, as from

beliefs about the specific applications of the neuroscientific technologies in

question.

|

| Star Trek's Commander Spock was generally ethical in his personal use of directed energy weapons, but not all of us are blessed with a Vulcan’s keen sense of right and wrong (Image). |

With that in mind, I think there are at

least three distinct angles from which an individual might find him or herself

concerned with respect to military neuroscience research. First, there are

those who are opposed to neuroscientific advancement in general due to its

potential implications on identity, moral responsibility, and human nature. Second,

there are those who may be amenable to scientific advancement in general, but

who distrust the military and are therefore suspicious of any technology that may

improve its combat capability. Finally, there are those who have no a priori problem with either the

military or technological advancement, but who have concerns about the ways

that particular technologies may be deployed within a military context.

Technological

Skeptics

Perhaps the most well-known skeptic of biotechnological

advancement is political scientist Francis Fukuyama, known largely for his 1989 prediction that the fall of the

Soviet Union would usher in the “end of history.” While Fukuyama does not oppose all biotechnological

advancement, his 2002 book Our Posthuman

Future: Consequences of the Biotechnology Revolution raises concerns about a number of particularly revolutionary

biotechnologies. For Fukuyama, belief in human equality depends upon a consensus

that there are certain essential qualities that unite all human beings. Modern

liberal societies, he argues, are notable in that they attribute essential

humanness to a range of persons – women, for instance, and racial minorities –

to whom such respect has historically been denied. Fukuyama’s fear is that

novel biotechnologies may undermine our collective belief in essential

humanness, and consequently the philosophical basis for political equality. One

area of particular concern for Fukuyama is neuropharmacology, where he predicts

the development of “sophisticated psychotropic drugs with more powerful and

targeted effects” with the capacity “to enhance intelligence, memory, emotional

sensitivity, and sexuality.” Many of Fukuyama’s arguments involve potential

inequalities generated through genetic engineering, but it is not difficult to

extend his logic to many neurotechnologies – for instance, brain-controlled prosthetic limbs,

or transcranial magnetic stimulation – currently under investigation by the military.

|

| Francis Fukuyama, author of Our Posthuman Future |

Fukuyama, and others who hold similar belief

systems, tend to oppose a wide range of military and civilian biotechnologies

rather than military neuroscience per se.

Such viewpoints, however, hold particular implications for military research if only because DARPA tends to be very, very good at what it does. Historically,

the military has played key roles in the development of microchips, cell

phones, GPS, and the Internet.

This is no coincidence: compared to other research programs, DARPA researchers

enjoy a number of unique advantages. In Mind Wars, bioethicist Jonathan Moreno points out

that “in the DARPA framework decades of development are acceptable” and that,

according to the DARPA strategic plan, “its only

charter is radical innovation.” That DARPA’s innovation is of this radical kind should constitute a point

of concern for those who believe that neuroscientific research is already

progressing too quickly. While private pharmaceutical firms might develop drugs

targeted to specific symptoms or diseases, DARPA has a particular incentive to

invent drugs that produce what might reasonably be called “superhumans”: drugs

that make humans more intelligent, that increase human endurance and stamina,

or that substantially enhance human physical performance.

Constraining The

Military

Other concerns

stem from the nature of the military itself: as an institution that uses force

to achieve political ends, it is worth asking whether military neuroscience

should be avoided insofar as it renders the military better capable of

achieving these ends.

One area of

concern relates to privacy, where several new technologies suggest a fundamental shift in the nature of state surveillance. In

my first post on military neuroscience, I discussed the Veritas TruthWave EEG

helmet and fMRI lie detection as two especially notable surveillance technologies. While both technologies have significant drawbacks – accuracy in the

case of the EEG helmet, and usability in the case of fMRI – such advances may

nevertheless have worrisome implications. An article on TruthWave raises one relevant concern regarding false positives: “When

a person’s life or freedom is at stake, what is an acceptable margin of error?”

In at least some cases – drone strikes on suspected terrorists, for instance, in

which “all military-age males in a strike zone” are assumed to be enemy combatants – the military has demonstrated a tendency to set the “acceptable margin of error” higher than it might be in, say, a court of law. The present generation of mind-reading technologies occupy a grey area in the sense that they’re likely accurate enough to be more reliable than intuition, but not so accurate as to reliably avoid false positives. In conjunction with high-stress combat situations or high-priority military objectives, this grey area may become particularly difficult to negotiate.

Further, it is possible that some innovations in military neuroscience may lower the cost of warfare, increasing the likelihood of intervention and violent conflict. In a 2005 article, Arizona State engineering and ethics professor Brad Allenby suggests that “military prowess, embodied in incredibly potent technological capabilities, acts like a drug, leading to dysfunctionally oversimplistic policy choices,” citing the Iraq war as a prominent example. Innovations in remote-operated robotic weapons – commonly known as UAVs, or “drones” – have already increased the U.S. military’s propensity to engage in combat operations. As one Washington Post editorialist puts it: “The detachment with which the United States can inflict death upon our enemies is surely one reason why U.S. military involvement around the world has expanded over the past two decades.”[1] Military neurotechnology seems poised to reinforce and accelerate this trend. Neurologically-controlled robots, for instance, may drastically improve the effectiveness and flexibility of current UAVs. More broadly, it seems likely that a war we are more likely to win is also a war that we are more likely to fight: in that sense, cognitively-enhanced soldiers, innovative prosthetics, and improved surveillance may all foreshadow a similar future involving a more powerful, more active U.S. military.

|

| UAVs, or “drones,” have likely decreased the U.S. military's threshold for global interventions (Image) |

Legality And

Other Issues

Many problematic aspects of new military

neuroscience technologies derive simply from the fact that they are new. Consequently,

many current international legal frameworks designed to constrain warfare may

not apply to new neuroscience technologies.

Concerns of this sort are discussed at

length in the U.K. Royal Society report. For instance, newly-developed pharmaceuticals may have uses

in the interrogation of suspected terrorists or prisoners of war. Coercion of

POWs is outlawed by the Geneva Convention, as are medical procedures contrary

to “the rules of medical ethics.” The extent to which the Geneva Convention

applies to terrorists, however, has been a matter of dispute.

Given recent reports of coercive pharmaceutical use at Guantanamo Bay,

concerns of this nature are particularly salient.

|

| As is often the case, key questions of ethics and policy hinge on exactly how similar our universe is to “24” (Image). |

The deployment of novel chemical

incapacitants in combat raises complex legal questions as well. The Chemical Weapons Convention (CWC), an

international agreement ratified by the U.S. in 1997, bans the use of chemical

weapons in conflict. At times, however, the CWC can be ambiguous: according to a U.K.

Royal Society report on neuroscience and conflict, parts of the CWC may be interpreted to allow “the use

of toxic chemicals to enforce domestic law extra-jurisdictionally or to enforce

international law.” Insofar as international law and extra-judicial enforcement

of domestic law are themselves open to interpretation, the CWC may leave the door open to some offensive uses of

chemical weapons.

Finally, the use of cognitive-enhancing drugs to aid friendly soldiers is complicated by a provision of the

Uniform Code of Military Justice requiring servicemembers “to accept medical

interventions that make them fit for duty.” On one hand, novel enhancement drugs might provide safer alternatives to

currently-employed stimulants such as amphetamines,

thereby lowering the health risks to military personnel. Alternatively,

however, safer drugs could lower the costs associated with coercive drug employment,

resulting in a lower threshold for coercion of military servicemembers.

It’s Not All Bad

Depending on your perspective, of

course, what I have called “concerns” may in fact be substantial benefits. I'm certain that leading transhumanist Nick Bostrom sincerely looks forward to to brain-computer interfaces, and that pro-military foreign policy analyst Robert Kagan wholeheartedly supports technologies that sustain U.S.

military dominance. More generally, neurologically-enabled prosthetics have obvious

benefits for disabled persons,

and civilian spin-offs of many military neurotechnologies hold considerable

medical and commercial promise.

|

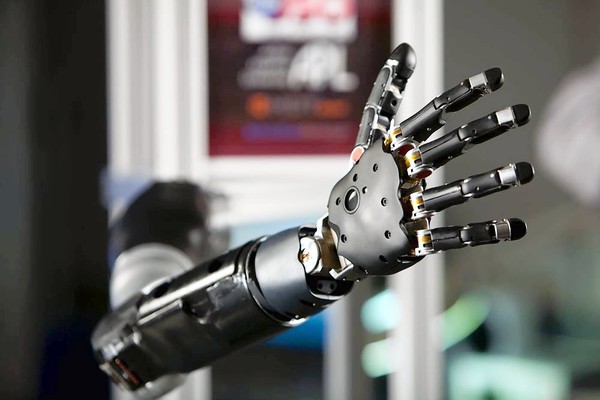

| A DARPA-produced prosthetic arm (Image) |

Nevertheless,

there is a large enough breadth of military neuroscience research, and an

similarly diverse set of ideological positions from which to criticize it, that any

given individual is likely to find something

in DARPA that they consider worthy of concern. Coming to such a conclusion,

however, is only a first step, and the second step: “what to do about it?” – does

not have a simple answer. To a large extent, those who publish on the

implications of dual-use neuroscience technology have made similar suggestions.

The Bulletin of Atomic Scientists, for instance, recommends greater interdisciplinary

discussion on dual-use technology, and a 2003 Nature editorial suggests that “researchers should perhaps spend more time pondering the

intentions of the people who fund their work.” While these publications are

certainly correct in identifying a need for additional discussion on dual use

technology, I’ve personally found myself frustrated at the lack of specific,

practical suggestions in much of the literature.

It is Jonathan Moreno who, to my knowledge, has offered the most concrete suggestions for coping with the implications of military neuroscience. In Mind Wars, Moreno warns neuroscientists against rejecting all military funding, arguing that such a position would only push DARPA research into greater secrecy. If military neuroscience research is inevitable, he reasons, it is better for it to be done transparently (and therefore in some sense democratically) than for it to be done underground, where it cannot be criticized simply because it is not known about. Instead, he recommends that neuroscientists attempt to influence public discussion on military neuroscience in a similar fashion to scientists who opposed nuclear weapons use during the Cold War. From a regulatory perspective, Moreno further recommends the creation of a “neurosecurity equivalent to the National Security Advisory Board for Biosecurity,” a committee that would include scientific input from a range of disciplines, including neuroethics. Moreno is also optimistic that at least some in the defense industry might take ethical issues seriously, citing one defense contractor who has “had ethics advice from the very beginning [of a brain-machine interface project].”

I don't agree with all of Moreno's suggestions - I think, for instance, that there's something to be said for the symbolic value of refusing to work on a project that you disagree with - but I admire his attempt to provide concrete solutions to an issue that defies simple analysis. In a 2012 article in PLoS Biology, Moreno and Michael Tennison suggest that “discussions in themselves will not ensure that the translation of basic science into deployed product will proceed ethically... These considerations must be embedded and explored at various levels in society: upstream in the minds and goals of scientists, downstream in the creation of advisory bodies, and broadly in the public at large.” Whether and how the ethics of military neuroscience are “embedded and explored” have yet to be determined, but the answer to these questions will have significant implications for the control and ultimate consequences military neuroscience research.

[1] Though it’s worth noting that the author, John Pike of Globalsecurity.org, considers this to be basically a good thing, writing that “the large-scale organized killing that has characterized six millenniums of human history could be ended by the fiat of the American Peace.”

Want to cite this post?

Gordon , R. (2012). The Military and Dual Use Neuroscience, Part II. The Neuroethics Blog. Retrieved on

, from http://www.theneuroethicsblog.com/2012/10/the-military-and-dual-use-neuroscience.html

Thanks, Ross, for the excellent article on a topic I think about a lot. I am a researcher who is a pacifist and who refuses any funding from military establishments. I agree with Moreno's statement that researchers should ponder the intentions of those who fund their work. In a climate of funding scarcity, it is very enticing to ignore or suppress one's scruples and follow the funding, wherever it may come from. Yes, some of DARPA's projects have useful civilian spin-offs, but I see every so-called success from DOD funded research as resulting in funding being drained away from the non-military government agencies that fund neuro research, the NIH and the NSF. So regardless of what the DOD's intentions are for research it funds, I won't help them suck the lifeblood (limited federal tax revenue) from the NIH and the NSF.
