The Ethical Design of Intelligent Robots

By Sunidhi Ramesh

|

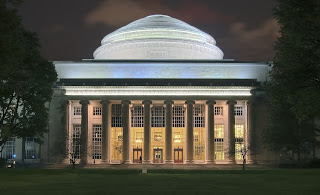

| The main dome of the Massachusetts Institute of Technology (MIT). (Image courtesy of Wikimedia.) |

On the morning of February 1, 2018, MIT President L. Rafael Reif sent an email addressed to the entire institute community. In it was an announcement introducing the world to a new era of innovation: the MIT Intelligence Quest, or MIT IQ.

Formulated to “advance the science and engineering of both human and machine intelligence,” the project aims “to discover the foundations of human intelligence and drive the development of technological tools that can positively influence virtually every aspect of society.” The kicker? MIT IQ not only exists to develop these futuristic technologies, but it also seeks to “investigate the social and ethical implications of advanced analytical and predictive tools.”

In other words, one of the most famous and highly ranked universities in the world has dedicated itself to preemptively considering the consequences of the future of technology while simultaneously developing that same technology in hopes of making a “better world.”

But what could these consequences be? Are there already tangible costs incurred from our current advances in robotics and artificial intelligence (AI)? What can we learn from the mistakes we make today to cater to a more just, whole, and objective tomorrow?

These questions are similar to the ones posed by Dr. Ayanna Howard at the inaugural The Future Now NEEDs... (Neurotechnologies and Emerging Ethical Dilemmas) talk on January 29th at Emory University. Speaking on the Ethical Design of Intelligent Robots, Dr. Howard presented a series of lessons and considerations concerning modern-day robotics, many of which will guide the remainder of this post.

But, before I discuss the ethics hidden between the lines of robotic design, I’d like to pose a fundamental question about the nature of human-robot interactions: do humans trust robots? And I’m not talking about whether or not humans would say they do; I’m asking about trust based on behavior. Do we, today, trust robots so much that we would turn to them to guide us out of high-risk situations?

A line of prototype robots developed by Honda. (Image courtesy of Wikimedia.)

You’re probably shaking your head no. But Dr. Howard’s research suggests otherwise.

In a 2016 study (1), a team of Georgia Tech scholars formulated a simulation in which 26 volunteers interacted “with a robot in a non-emergency task to experience its behavior and then [chose] whether [or not] to follow the robot’s instructions in an emergency.” To the researchers’ surprise (and unease), in this “emergency” situation (complete with artificial smoke and fire alarms), “all [of the] participants followed the robot in the emergency, despite half observing the same robot perform poorly [making errors by spinning, etc.] in a navigation guidance task just minutes before… even when the robot pointed to a dark room with no discernible exit, the majority of people did not choose to safely exit the way they entered.” It seems that we not only trust robots, but we also do so almost blindly.

The investigators labeled this tendency an alarming display of overtrust in robots, one that applied even to robots that showed signs of being untrustworthy.

Not convinced? Let’s consider the recent Tesla self-driving car crashes. How, you may ask, could a self-driving car barrel into parked vehicles when the driver is still able to override the autopilot machinery and manually stop the vehicle in seemingly dangerous situations? Yet, these accidents have happened. Numerous times.

The answer may, again, lie in overtrust. “My Tesla knows when to stop,” such a driver may think. Yet, as the car lurches uncomfortably into a position that would push the rest of us to slam on our brakes, a driver in a self-driving car (and an unknowing victim of this overtrust) still has faith in the technology.

“My Tesla knows when to stop.” Until it doesn’t. And it’s too late.

What will a future of human-robot interaction look like? (Image courtesy of Wikimedia.)

Now, don’t get me wrong. Trust is good. It is something that we rely on every single day (2); in fact, it is a critical component of our modern society. And, in an increasingly probable future of commonplace human-robot interaction, trust will undeniably play an increasingly significant role. Not many will disagree that we should be able to trust our robots if we are to interact with them positively.

But what are the dangers of overtrust? If we already trust robots this much today, how will this trust evolve as robots become more versatile? More universal? More human? Is there a potential for abuse here? The answer, Dr. Howard warns, is an outright yes.

Within this discussion about trust lies another, more subtle line of questioning—one about bias.

Robots, engineered by the human mind, will inherently carry human biases. Even the best programmer with the best intentions will, unintentionally, produce technology that is partial to their own experiences. So, why is this a problem?

Consider the Google algorithm that made headlines in mid-2015 for “showing prestigious job ads to men but not to women.” Or the Flickr image recognition tool that tagged black users as “gorillas” or “animals.” Or the 2013 Harvard study that found that “first names, previously identified as being assigned at birth to more black than white babies… generated [Google] ads suggestive of an arrest.”

This problem is neither new nor unique; ads and algorithms programmed by humans (intentionally or unintentionally) inherit the sexist and racist tendencies carried by those humans.

(Image courtesy of Flickr.)

And there’s more. North Dakota’s police drones have been legally armed with weapons such as “tear gas, rubber bullets, beanbags, pepper spray, and tasers” for over two years. How do we know that the software being used in these systems is trustworthy? That it has been rigorously monitored and tested for aspects of bias? Whose value systems are being inputted here? And do we trust them enough to trust the robots involved?

As our world continues to tumble forward into a future intricately immersed in technology, these questions must be addressed. Robotics development teams should include members with an extensive diversity of thought, spanning economic, gender, ethnic, and even “tech” (referring to a diversity in technical training) lines to mitigate biases that may negatively impact the robots’, well, intelligence. (Granted, bias is inherent to humanity, so there is a danger in thinking that we could ever objectively produce robots that are entirely unbiased. Still, it is a step in the right direction to at least recognize that bias may present itself as a problem and to actively attempt to avoid blatant manifestations of it.)

To answer the question of reducing bias in the future, Dr. Howard ventured so far as to suggest a sort of criminal robot court, one that would rigorously test our robots before they are put in the hands of the real world. Within it would be a “law system” that evaluates the hundreds of thousands of inputs and their associated outputs in an attempt to catch coding errors long before they have the potential to impact society on a larger level.
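For readers who think in code, here is one way such an input-output review might look in miniature. This is only a sketch under stated assumptions, not Dr. Howard's proposal: the function `audit_counterfactuals`, the toy `biased_ad_selector`, and the fields `gender` and `experience_years` are all hypothetical names invented for illustration. The idea is to feed the system pairs of inputs that differ only in a protected attribute and flag every case where the output changes.

```python
from typing import Callable, Dict, Iterable, List, Tuple

Case = Dict[str, object]


def audit_counterfactuals(
    decide: Callable[[Case], str],
    cases: Iterable[Case],
    attribute: str,
    values: Tuple[object, ...],
) -> List[Tuple[Case, Dict[object, str]]]:
    """Return every case whose decision changes when only `attribute` changes."""
    violations = []
    for case in cases:
        # Run the same case once per attribute value, holding all else fixed.
        outcomes = {v: decide({**case, attribute: v}) for v in values}
        if len(set(outcomes.values())) > 1:
            violations.append((case, outcomes))
    return violations


# Hypothetical system under test: a deliberately flawed ad selector.
def biased_ad_selector(case: Case) -> str:
    if case["gender"] == "male" and case["experience_years"] >= 10:
        return "executive_job_ad"
    return "generic_job_ad"


# Hypothetical test inputs; a real audit would use far more of them.
cases = [{"experience_years": years} for years in (2, 10, 15)]
violations = audit_counterfactuals(
    biased_ad_selector, cases, "gender", ("male", "female")
)
for case, outcomes in violations:
    print("Decision depends on gender for", case, "->", outcomes)
```

A check like this only catches the disparities someone thought to test for, which is one more reason the makeup of the team writing the tests matters.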

So, in a lot of ways, we are in the midst of a golden era. We can still ask these questions in the hope of presenting them to the world to answer; technology can be molded to be what we want it to be. And, as time goes on, robotics and AI will together become an irrefutable aspect of the future of the human condition. Of human identity.

What better time to question the social consequences of robotic programming than now?

Maybe MIT is up to something big after all.

References

1. Robinette, Paul, et al. "Overtrust of robots in emergency evacuation scenarios." Human-Robot Interaction (HRI), 2016 11th ACM/IEEE International Conference on. IEEE, 2016.

2. Zak, Paul J., Robert Kurzban, and William T. Matzner. "The neurobiology of trust." Annals of the New York Academy of Sciences 1032.1 (2004): 224-227.

Want to cite this post?

Ramesh, Sunidhi. (2018). The Ethical Design of Intelligent Robots. The Neuroethics Blog. Retrieved on , from http://www.theneuroethicsblog.com/2018/02/the-ethical-design-of-intelligent-robots.html
